Journal

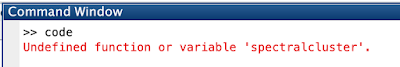

On Tuesday, March 24th, we met with Professor Hassibi over Zoom. Before the meeting, our code had not been producing the results we expected. He told us to find and plot the eigenvalues of the graph Laplacian, since they indicate how many clusters were found and give a value that other data points can be compared against. He also noted that plotting the corresponding eigenvector would show whether a cluster had been isolated. From there, it was up to us to tinker with the values for a while and try different algorithms, such as K-means. Below is a video of the meeting with Professor Hassibi:

This week has been very interesting, to say the least. As you may know, COVID-19 has just thrown the U.S. for a loop and put daily life on pause, forcing businesses to adapt, overcome, or, in some cases, fail. For us high schoolers, classes have migrated to Zoom. With that came teachers with bad internet service, leading to indecipherable dialect